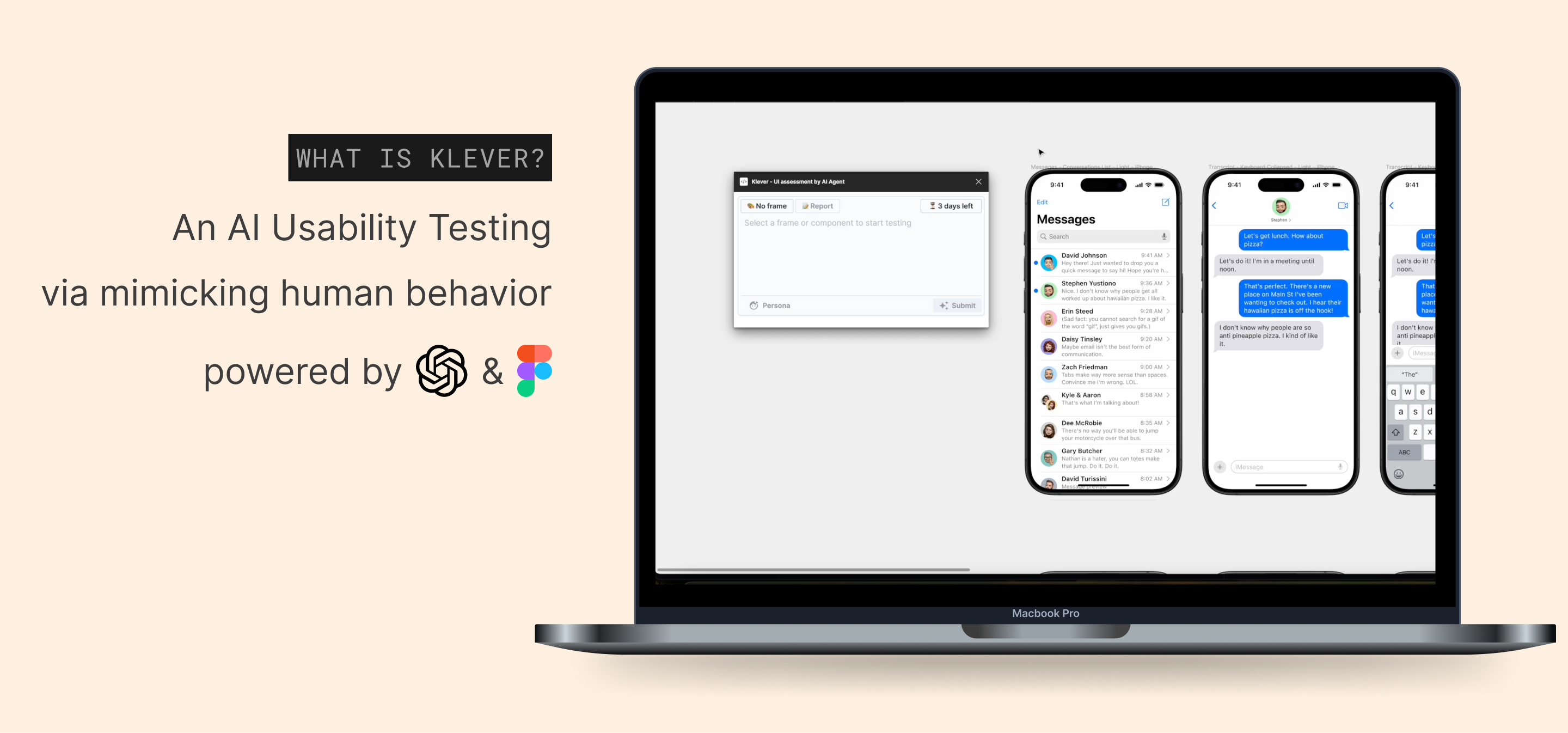

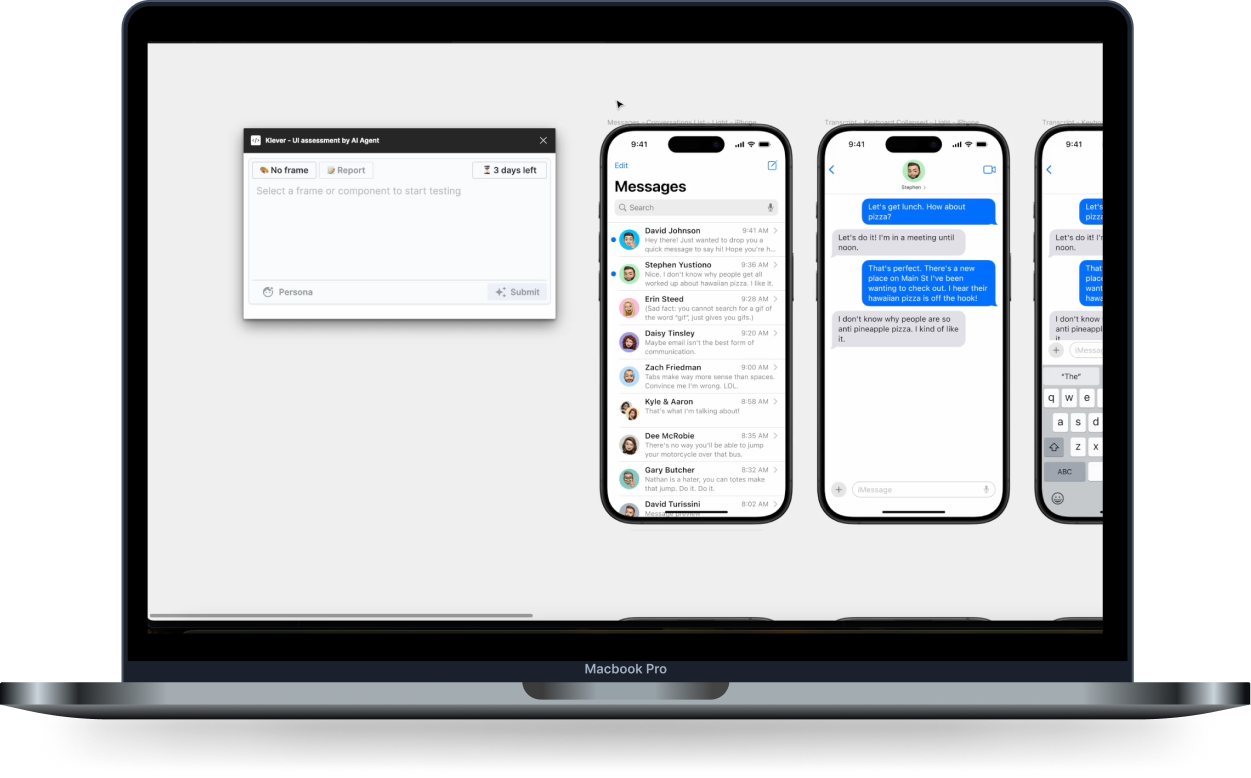

Introducing Klever

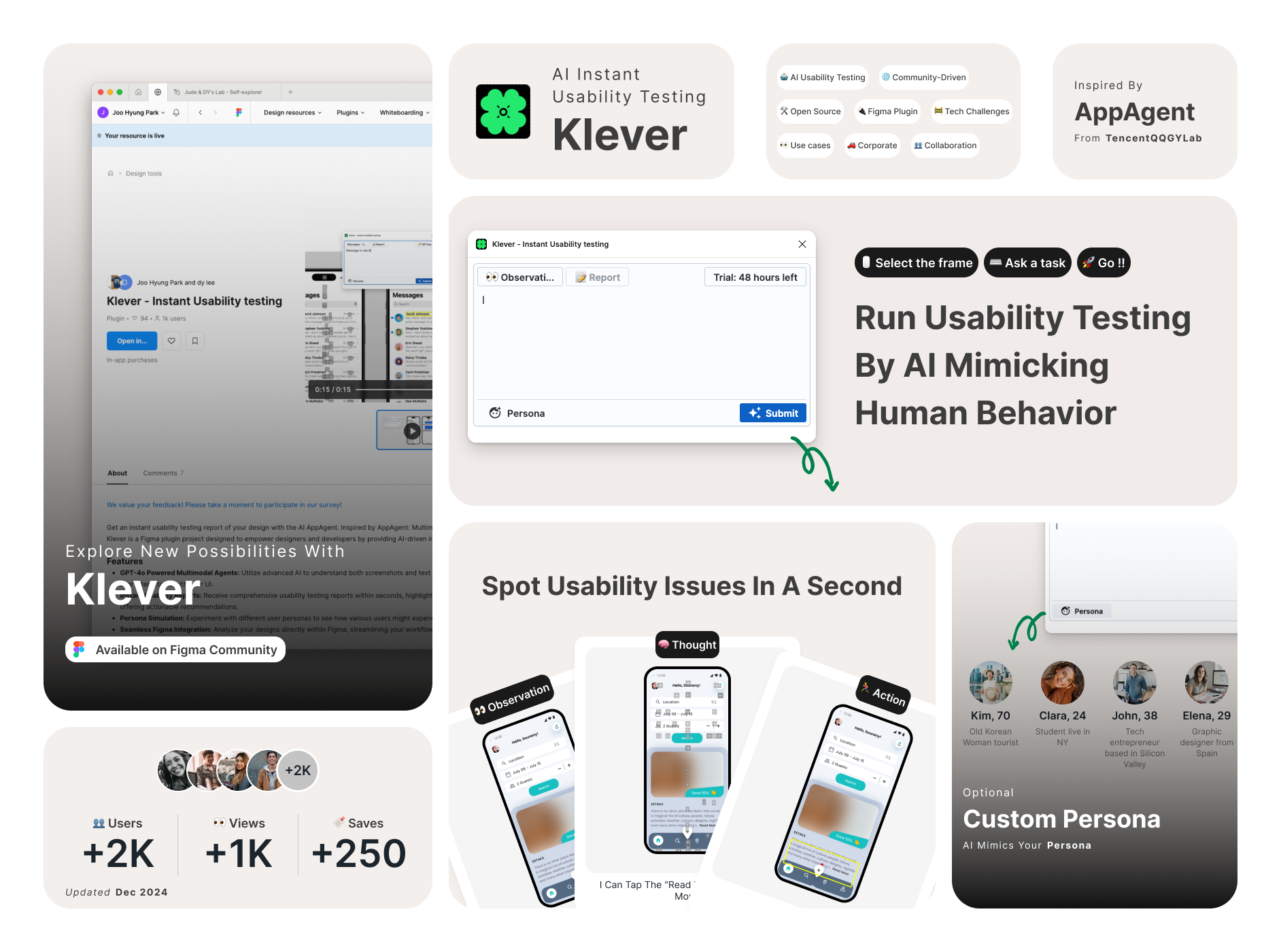

Klever is a Figma plugin that leverages AppAgent’s cutting-edge AI technology to provide designers with a seamless and intuitive usability testing experience. With Klever, designers can instantly gain valuable insights and enhance their design process—all within the familiar environment of Figma. Below is a summary of the core features and benefits of Klever.

Summary of Klever plugin features

This project was born out of a desire to address the evolving challenges faced by designers in an AI-driven world. In the following sections, we’ll explore the inspiration behind Klever, the journey of its development, and the impactful results it has achieved. Join us as we delve into the story of how Klever came to be and the innovative solutions it offers.

💡 The Ideation Journey

The Mirror of Your Mind (명심보감/明心寶鑑) is a book that encapsulates the wisdom of Eastern philosophy. It teaches that to live a truly human life, one must broaden their learning, maintain a sincere purpose, never lose curiosity, keep questioning, and solve problems step by step. These teachings resonate deeply with us, product designers, living in the 21st-century digital and AI age. After all, it’s crucial to maintain a positive mindset even in moments of change.

It’s another morning, and as sunlight spills through my window, I fire up my laptop. Slack is buzzing with AI news. Curiosity piqued, I click on a few. Watching these AI design tool demos, a chill runs down my spine — they’re doing what I do. It makes me wonder —

“Is AI really going to replace us designers?”

I’m not entirely sure, but all I know is that I want to keep doing my best, today and tomorrow.

In a cozy nook of a Starbucks in western Singapore, two Grab designers, DY LEE and Jude Park, kicked off what was just a humble AI side project. It wasn’t about achieving something monumental; it was about confronting the genuine anxieties of diving into AI, maintaining a hopeful perspective, and exploring new avenues for growth in an AI-driven landscape.

Jan 2024: The Question

Can AI Enhance Design?

As we move through 2024, AI is everywhere, and it’s changing how we work in design. Every day, new AI tools and models emerge and transform the way we approach our work. We initially thought AI would take over the boring tasks, freeing us to focus on creativity. However, AI is now involved in everything from generating creative content to designing user interfaces, and it is visibly reshaping our processes.

In light of these changes, we found ourselves asking:

How can we embrace the power of AI in our design process?

Beyond just automating tasks, what role can AI play in supporting us as designer assistants?

We didn’t find the perfect answer right away, but our exploration led us to a crucial realization: being a product designer is about more than just creating visual designs. It’s about identifying challenges, devising solutions, and deeply understanding user needs and behaviors.

Instead of letting AI dictate our design decisions, we began to explore how we could harness its power to strengthen our insights and gain a deeper understanding of user behavior. This shift in perspective opened up new possibilities, leading us to discover Tencent’s research on AppAgent - a finding that felt perfectly timed for our needs.

Feb 2024: The Discovery

Meeting AppAgent and the Eureka Moment

AppAgent: Multimodal Agents as Smartphone Users

appagent-official.github.io

Tencent’s research team introduced AppAgent, a multimodal agent capable of interacting with apps naturally. Using an LLM and Android Studio, AppAgent demonstrates how AI can explore apps and use gestures like a human.

As designers, we found ourselves captivated by AppAgent’s remarkable ability to simulate user behavior. It was as if we were watching real users interact with an app, tapping and swiping their way through the interface. This AI-driven mimicry of human interaction not only sparked our curiosity but also opened our minds to new creative possibilities.

This led us to ponder an exciting question:

What new horizons could we explore by integrating AppAgent with our Figma designs?

The idea of leveraging AI-generated user flows and behaviors in our UX research was compelling. It promised a more efficient way to address user challenges and conduct iterative testing, allowing us to refine our designs with greater precision.

To bring this vision to life, we knew we needed insights from our peers. We reached out to fellow designers, eager to gather their perspectives and explore how AppAgent could revolutionize our design process.

Feb 2024: The Validation

Designer Interviews

We wanted to understand how AI & AppAgent could naturally fit into our design process, so we spoke with 10 designers across 7 teams over two weeks. Our goal was to uncover the challenges they face and see where AppAgent might offer solutions.

From these interviews, we uncovered three key insights:

⏳ Research is Time-Consuming: User research often requires significant time and resources.

🤷‍♂️ Lack of Alternatives: Designers struggle to find varied and effective alternatives when testing new concepts.

🚀 Potential for Instant Testing: AppAgent could greatly improve the speed and quality of usability testing.

AI is not a magic wand that solves every problem. The key is understanding how AI can add real value to the design process. Through conversations with designers, we discovered the potential for AppAgent to positively transform the way we design.

Mar 2024: The Vision

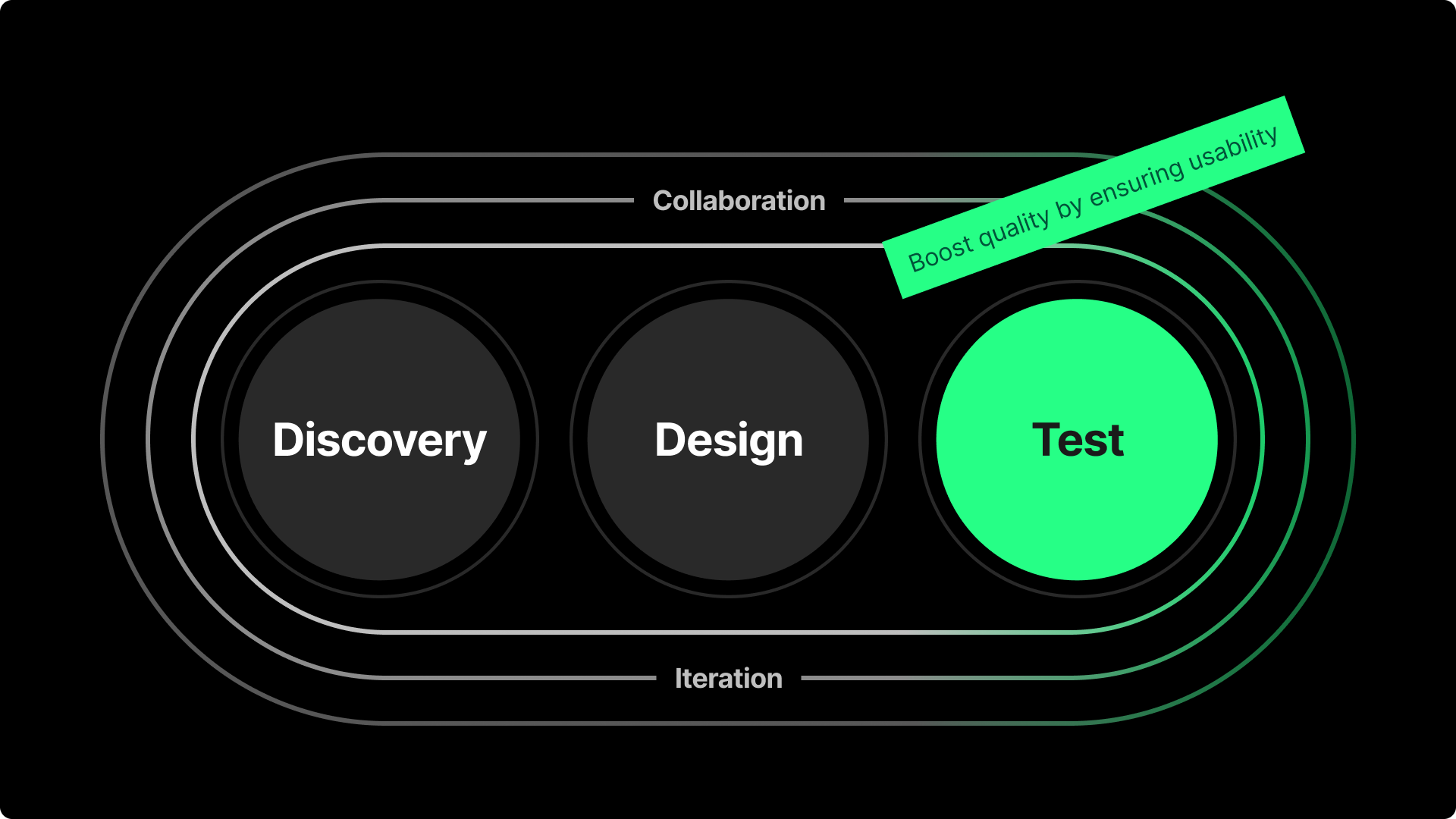

Instant Usability Testing

We set out to create a seamless connection between design and testing with AppAgent. Typically, testing designs requires organizing user sessions and justifying the time and resources involved. Convincing engineers to test different versions can also be a hurdle. However, what designers really want to know is simple: “Is this design delivering a great user experience?”

By integrating AppAgent with Figma’s prototype screens, we envisioned a tool that could answer those kinds of questions more easily.

How might we let AI act as real users to help designers get insights without recruiting and interviewing?

What if AI could behave like a persona, exploring designs just as a human would?

AI instant usability testing, that’s our answer.

This isn’t just about passing or failing a QA test. It’s about using AI to mimic real user interactions, allowing it to navigate through designs and suggest optimal paths with minimal clicks, or to point out areas that need refinement. AppAgent performs heuristic evaluations on prototypes, delivering clear and actionable insights without requiring lengthy business justifications.

Our task now is to bring this concept to life.

🛠️ The Development Journey

Integrating AppAgent with Figma’s prototype feature presented several significant technical hurdles. As this started as a small side project by designers, expanding from an Android app to Figma required us to overcome multiple complex challenges.

Mar 2024: Understanding

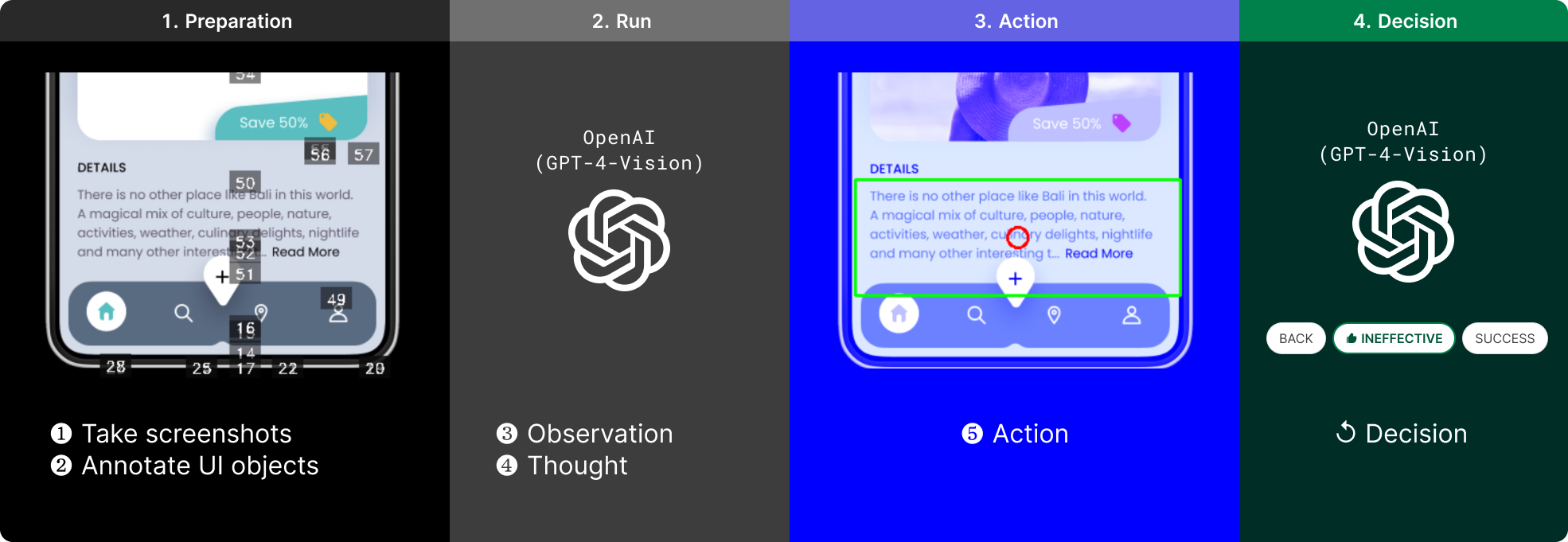

How AppAgent Works

Our initial challenge was to grasp the fundamental workings of AppAgent. The original AppAgent operates in a cycle of Observation, Thought, and Action, iterating multiple times.

The original AppAgent operates: Observation → Thought → Action

AppAgent provides a screenshot with UI annotations and a task to the GPT-4-vision model, which observes, thinks, and suggests the next action. This process repeats, simulating user interaction.
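The cycle described above can be sketched in a few lines of Python. This is only an illustration of the Observation → Thought → Action pattern, not AppAgent's actual code: the `Step` class, the `run_agent` function, the `FINISH` marker, and the three callables (`capture`, `ask_model`, `perform`) are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    observation: str  # what the model reports seeing on the screen
    thought: str      # the model's reasoning about what to do next
    action: str       # the suggested action, e.g. "tap(3)" or "FINISH"


def run_agent(
    task: str,
    capture: Callable[[], str],
    ask_model: Callable[[str, str, List[Step]], Step],
    perform: Callable[[str], None],
    max_rounds: int = 10,
) -> List[Step]:
    """Repeat observe -> think -> act until the model finishes or rounds run out."""
    history: List[Step] = []
    for _ in range(max_rounds):
        screenshot = capture()                       # annotated screenshot of the current screen
        step = ask_model(task, screenshot, history)  # the vision model proposes the next step
        history.append(step)
        if step.action == "FINISH":                  # hypothetical stop marker
            break
        perform(step.action)                         # execute the action on the app or prototype
    return history
```

Passing the three callables in from outside keeps the loop independent of where screenshots come from and how actions are executed, which is the part that has to change when moving from an Android device to a Figma prototype.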

To adapt this for Figma prototypes, we faced two critical challenges:

- Creating screenshot images and annotations for UI elements in Figma prototypes

- Implementing the AI model’s suggested actions within Figma prototypes

Understanding these core operations was essential to integrating AppAgent with Figma, setting the stage for our development journey.

Mar 2024: Experimenting #1

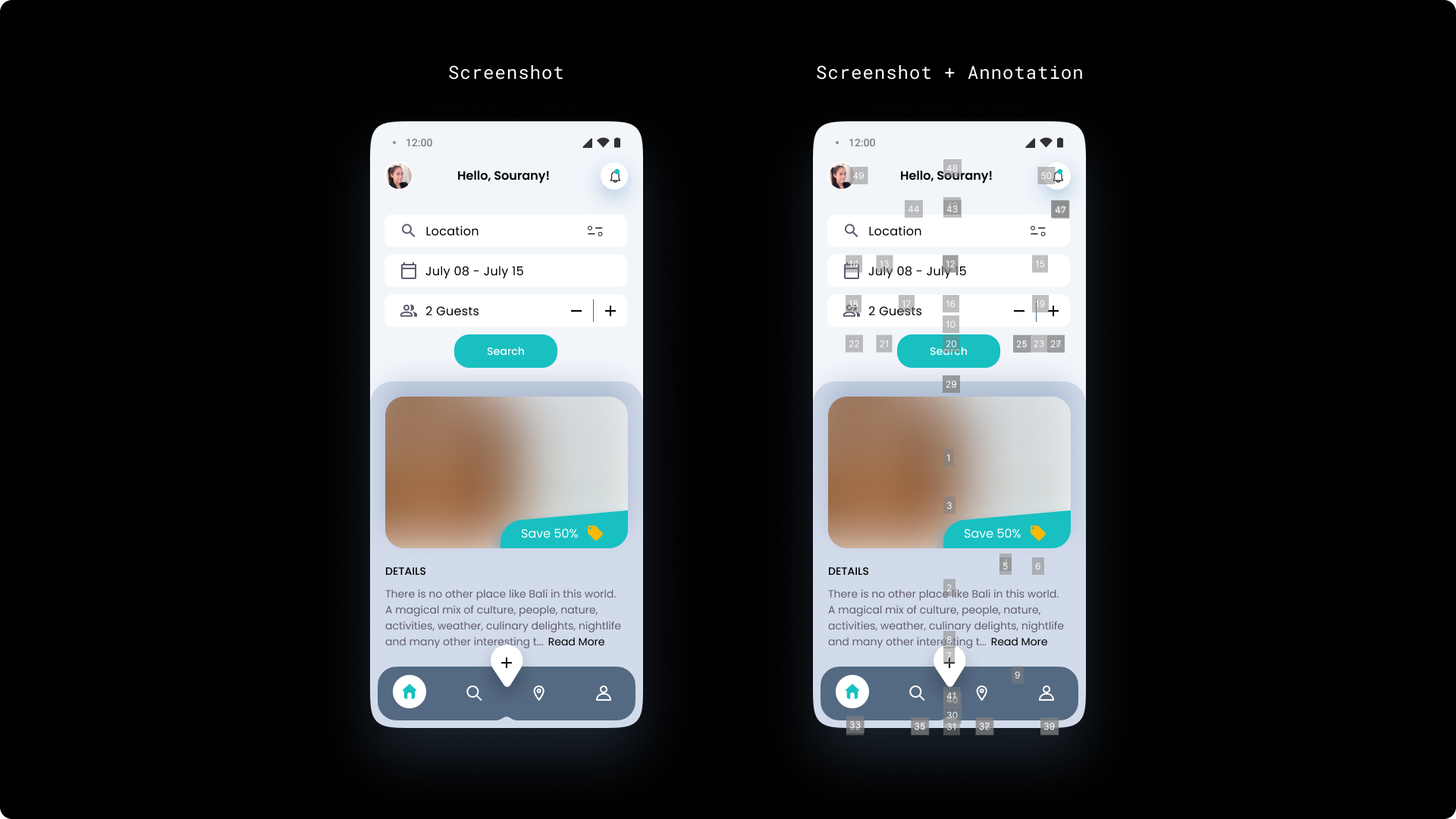

Generating UI Screenshots & Annotations

Creating screenshot images and annotations in Figma prototypes wasn’t too difficult. We used Figma’s REST API to fetch node data from Figma design, convert it to images, and generate screenshot images and annotations.

A sample screenshot vs A screenshot with UI object annotations
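As a rough illustration of the annotation step, the sketch below walks a node tree shaped like the JSON returned by Figma's REST API (nodes with `id`, `name`, `type`, `children`, and `absoluteBoundingBox`) and produces numbered labels positioned relative to the frame. The `walk` and `annotate` helpers are hypothetical, and treating `INSTANCE` and `TEXT` nodes as the interactive elements is an assumption made for this example, not necessarily the rule we used.

```python
from typing import Dict, Iterator, List, Tuple


def walk(node: Dict) -> Iterator[Dict]:
    """Yield a node and all of its descendants, depth first."""
    yield node
    for child in node.get("children", []):
        yield from walk(child)


def annotate(frame: Dict, element_types: Tuple[str, ...] = ("INSTANCE", "TEXT")) -> List[Dict]:
    """Return numbered labels with boxes relative to the frame's top-left corner."""
    origin = frame["absoluteBoundingBox"]
    labels: List[Dict] = []
    for node in walk(frame):
        box = node.get("absoluteBoundingBox")
        # Skip the frame itself, nodes without geometry, and non-element types.
        if node is frame or box is None or node.get("type") not in element_types:
            continue
        x = box["x"] - origin["x"]
        y = box["y"] - origin["y"]
        labels.append({
            "label": len(labels) + 1,  # the number drawn on the screenshot
            "node_id": node["id"],
            "name": node.get("name", ""),
            "box": (x, y, box["width"], box["height"]),
            "center": (x + box["width"] / 2, y + box["height"] / 2),
        })
    return labels
```

If the screenshot is exported at a scale other than 1, the box and center values would need to be multiplied by the same factor before labels are drawn onto the image.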

However, implementing the resulting actions in Figma prototypes proved challenging. Figma prototypes render their designs onto a <Canvas> element, much like a PNG image, so individual UI components are not exposed as separate elements that can be recognized and interacted with.

Mar 2024: Experimenting #2

Implementing AI Actions in Figma

The solution was Selenium, a tool for controlling web browsers and interacting with web page UI elements. We created a SeleniumController to control Figma prototypes at the web browser level.

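```python
# AppAgent/scripts/figma_controller.py
from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.chrome.service import Service
from webdriver_manager.chrome import ChromeDriverManager


class SeleniumController:
    def __init__(self, url, password):
        self.url = url            # URL of the Figma prototype
        self.password = password  # password for the prototype
        self.driver = None        # created in execute_selenium()

    def execute_selenium(self):
        options = Options()
        # Reuse a local Chrome profile between runs
        options.add_argument("user-data-dir=./User_Data")
        options.add_argument("disable-blink-features=AutomationControlled")
        options.add_argument("--start-maximized")
        # Keep the browser window open after the script ends
        options.add_experimental_option("detach", True)

        service = Service(ChromeDriverManager().install())
        self.driver = webdriver.Chrome(service=service, options=options)
        self.driver.get(self.url)
        # ...
```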

By structuring the SeleniumController similarly to the AndroidController, we enabled control over Figma prototypes. We integrated this with the existing logic to run it as a new option, distinct from the Android-based approach.
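One way to picture this is a shared interface that both controllers satisfy, so the same action-handling logic can drive either backend. The sketch below is a simplified illustration under our own assumptions: the `Controller` protocol, the `RecordingController` stand-in, the `dispatch` helper, and the reduced `tap(n)` / `swipe(n, "direction")` action strings are hypothetical and do not reproduce AppAgent's real action grammar or method signatures.

```python
import re
from typing import Dict, List, Optional, Protocol, Tuple


class Controller(Protocol):
    """Minimal surface an Android-style or Selenium-style backend would share."""

    def tap(self, x: float, y: float) -> None: ...

    def swipe(self, x: float, y: float, direction: str) -> None: ...


class RecordingController:
    """Stand-in backend that records calls instead of driving a device or browser."""

    def __init__(self) -> None:
        self.calls: List[Tuple] = []

    def tap(self, x: float, y: float) -> None:
        self.calls.append(("tap", x, y))

    def swipe(self, x: float, y: float, direction: str) -> None:
        self.calls.append(("swipe", x, y, direction))


# Hypothetical, simplified action syntax: tap(3) or swipe(3, "up")
ACTION = re.compile(r'^(tap|swipe)\((\d+)(?:,\s*"(\w+)")?\)$')


def dispatch(action: str, centers: Dict[int, Tuple[float, float]], controller: Controller) -> None:
    """Translate a model-suggested action into a call on whichever backend is in use."""
    match = ACTION.match(action.strip())
    if match is None:
        raise ValueError(f"unsupported action: {action!r}")
    name = match.group(1)
    label = int(match.group(2))
    direction: Optional[str] = match.group(3)
    x, y = centers[label]  # label number -> coordinates of the annotated element
    if name == "tap":
        controller.tap(x, y)
    else:
        controller.swipe(x, y, direction or "up")
```

Because `dispatch` only depends on the protocol, swapping the Android backend for a browser-based one would not require touching the code that interprets the model's output.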

Mar 2024: Working Demo

🎉 Prototyped: AppAgent’s Integration with Figma

After much trial and error, we successfully developed a new option where AppAgent controls Figma prototypes, recognizes UI elements, and interacts with them, as demonstrated in the YouTube video below.

Jun 2024: Evolving

From experiment to product, Klever was born

As we shared our prototype with the design community, we received enthusiastic responses about its potential. However, we also discovered a significant challenge: the Python development environment was a major barrier for many designers who wanted to try it out.

This feedback led us to make a BOLD decision. We decided to develop a Figma plugin version, transforming our experimental implementation of Tencent’s paper into a more accessible product for all product designers.

This evolution needed a new identity, and that’s how ‘Klever’ was born.

Klever is a Figma plugin that harnesses the power of AppAgent’s AI technology while offering a seamless, designer-friendly experience.

It empowers designers to conduct instant usability testing and gain valuable insights, all within Figma!

In developing the Klever plugin, we faced many technical challenges. The biggest challenge was how to implement AppAgent’s core functionality in the limited environment of a Figma plugin. Some notable challenges include:

- Transition to TypeScript

- Development of the UIs

- Utilizing Figma Plugin API

As designers, we did not find it easy to write the code needed to overcome these challenges, but with Copilot’s help we worked through them and built the Klever plugin. As a result, AppAgent’s core functionality can now be used inside a Figma plugin as well.

🧠 Interesting Use Cases

As we applied AppAgent for Figma to several prototypes, we discovered some truly fascinating cases. These cases demonstrated that AppAgent could evolve beyond a simple lab model to become a design productivity tool capable of predicting actual user behavior and identifying potential design issues.

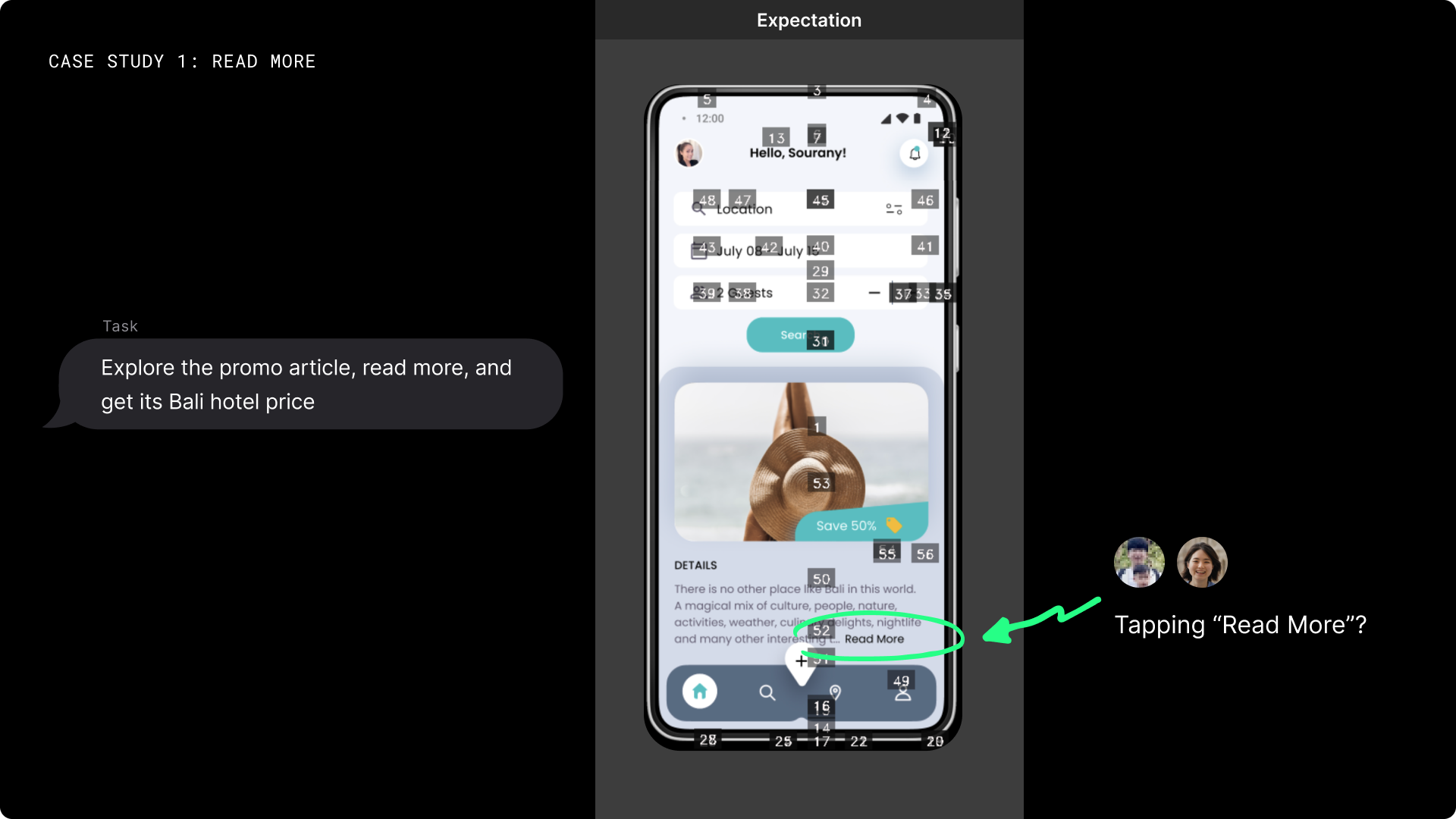

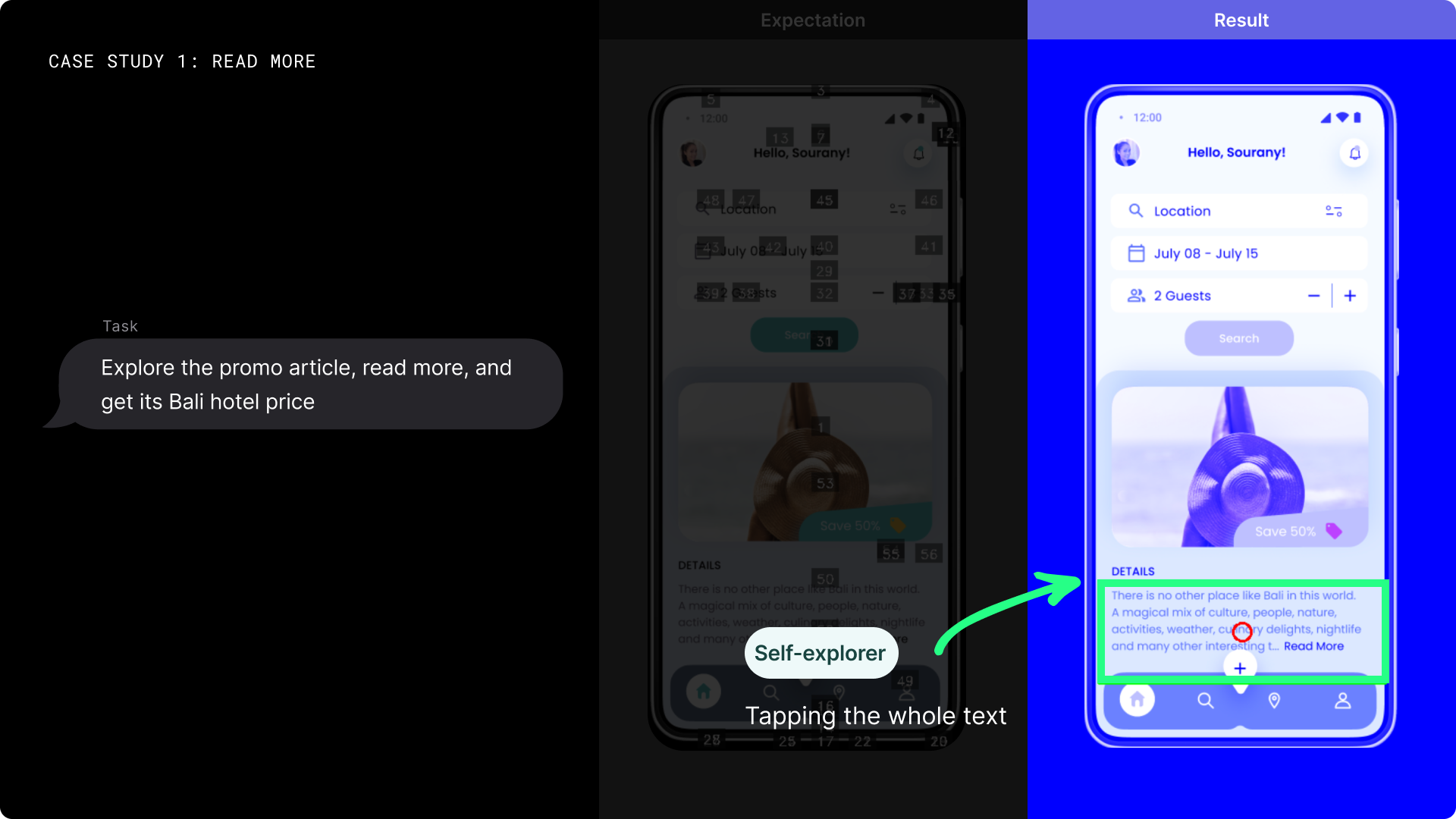

Use Case #1

Discovering Hidden Interactions

AI Agent found a prototype noodle that the designer missed.

In a travel app prototype, we asked the AI agent to check promotions and find the cheapest hotel in Bali. Contrary to our expectations, the AI agent tried to expand the article by clicking the center instead of pressing the ‘Read more’ button or the search button. This is a familiar UI pattern used in many apps, but it was mistakenly not connected in this prototype. The AI agent captured this important interaction that the designer had missed.

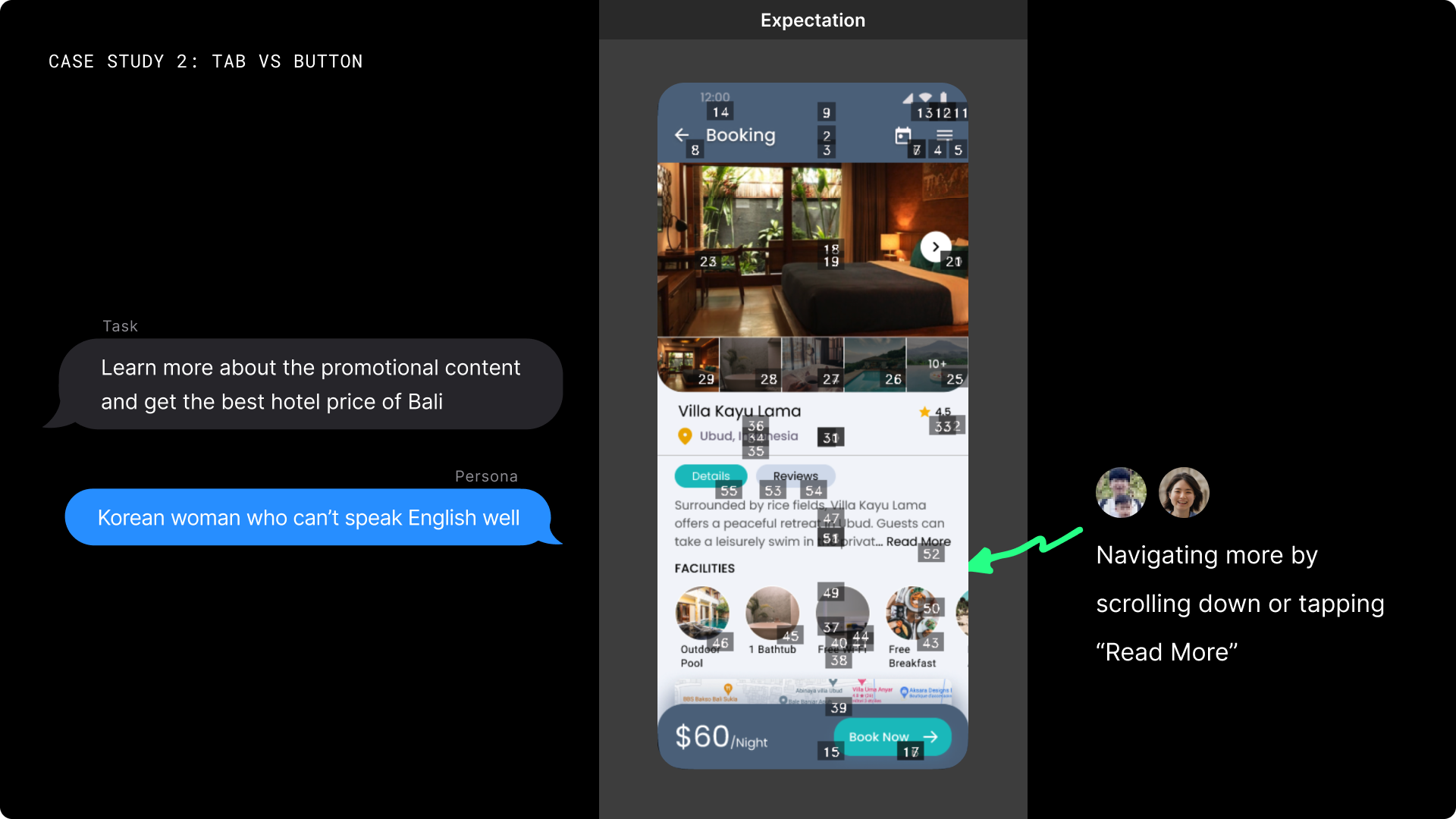

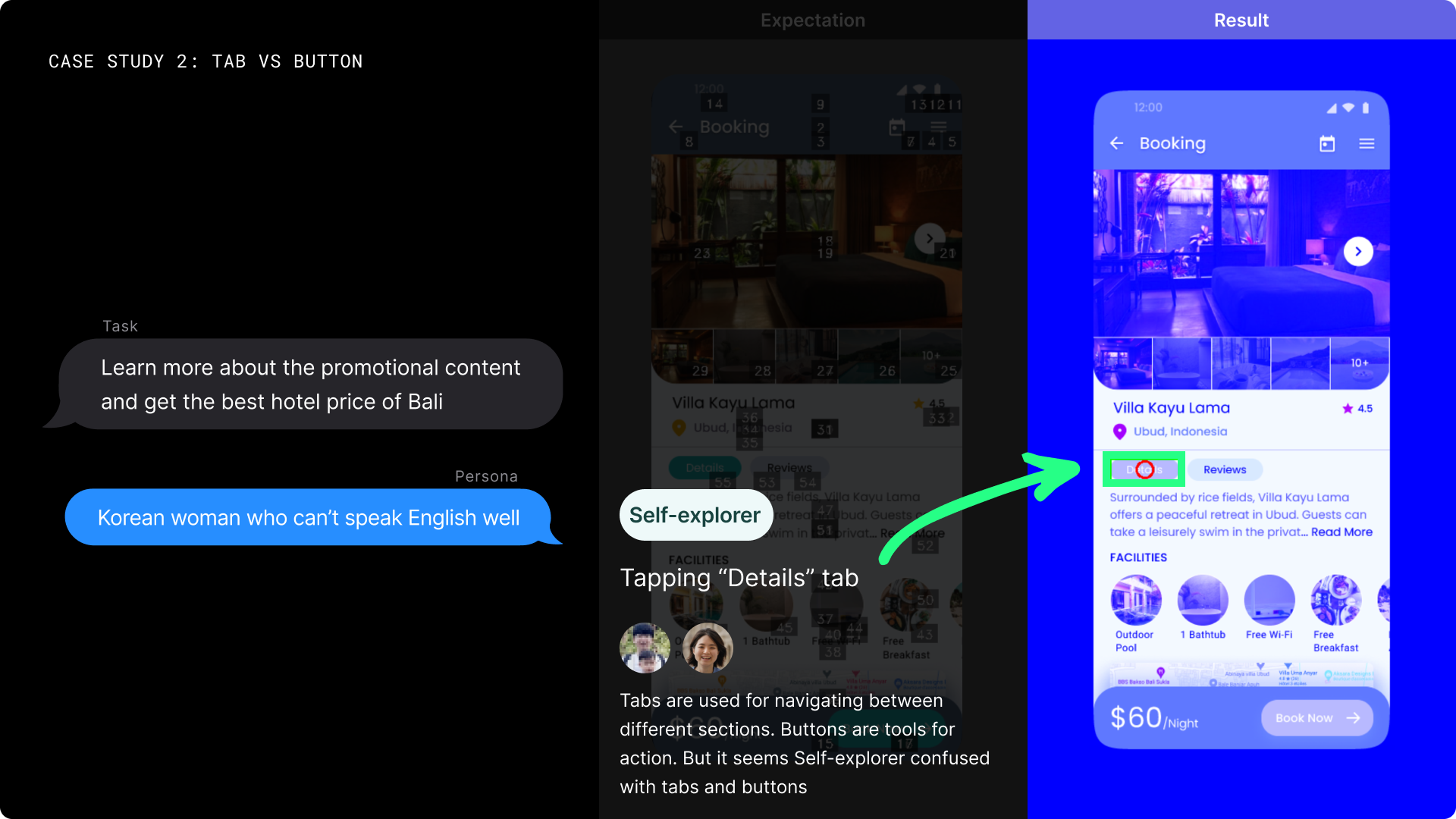

Use Case #2

Identifying UI Element Ambiguity

AI Agent discovered the confusing visual design of the UI components.

On the hotel details screen of the same prototype, we instructed the AI agent to find more information. We expected the AI to expand the article for ‘Read more’, but it repeatedly clicked the ‘Detail’ tab instead. This shows that the AI agent, like a person, confused tabs and buttons due to their similar visual designs. It highlights how the AI agent can spot visual design details that the designer might have overlooked.

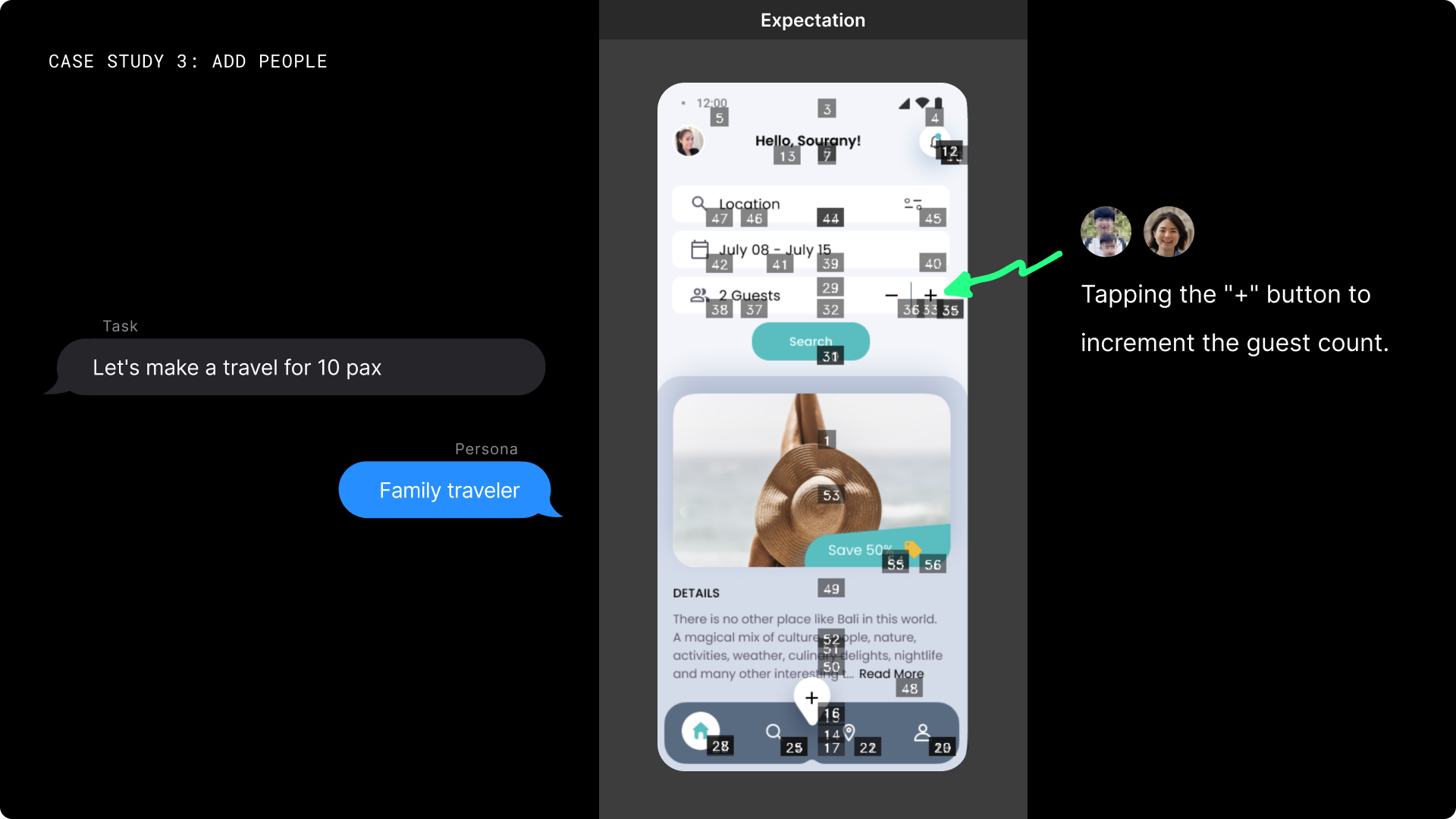

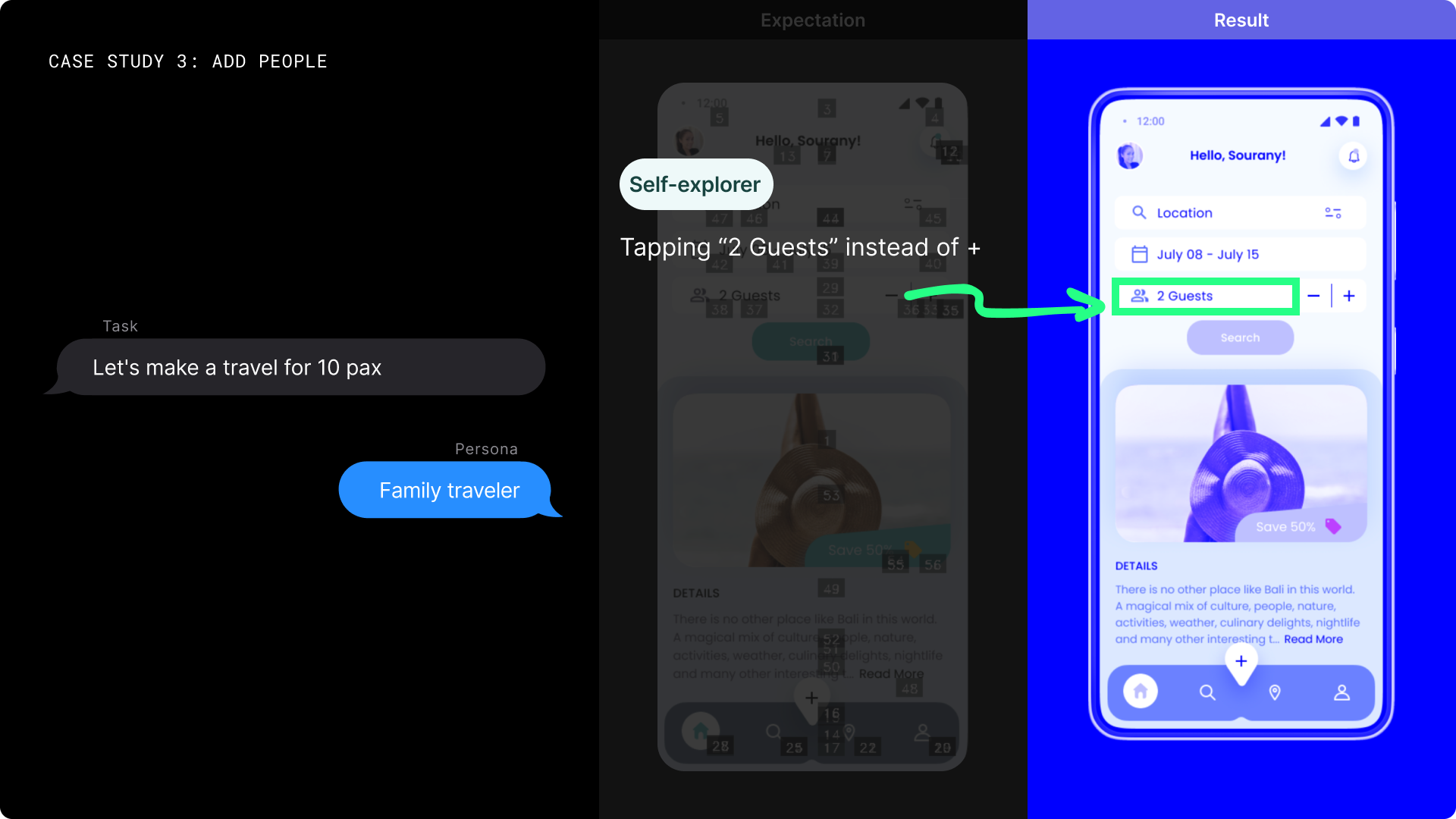

Use Case #3

Predicting Hidden Features

AI Agent predicted the next UI and performed the action.

In a task to change the number of guests to 10, the AI agent clicked the ‘2 guests’ area instead of pressing the ‘+’ button. Despite being given only a single screen, the AI predicted that clicking this area would reveal additional options. This shows that the AI can understand and predict common UI patterns, not just process the given information.

These cases demonstrate that this AI-based usability testing agent can think and act like a real user, not just follow programmed rules. It provides insights that are as valuable as those from actual users. These moments were the most thrilling and fascinating for us as creators.

📈 Impact

Despite being a paid plugin (due to API costs), Klever has attracted more interest in the Figma Community than we expected, demonstrating strong demand for AI-powered usability testing tools. The numbers are still growing every week:

👀 2,093 Views

👥 1,076 Users

🔖 248 Saves

These numbers significantly exceed our expectations for a paid plugin, validating our vision of making AI usability testing more accessible to designers. The growing user base and engagement metrics demonstrate that there’s a real need in the design community for tools that can streamline the usability testing process while leveraging AI capabilities.

🌱 Growing with Community

Klever’s successful launch opened up numerous opportunities to share our journey with the broader design community. We actively shared our project’s journey and findings, believing that open collaboration leads to better solutions. From local meetups to major tech conferences, each sharing session not only helped us gather valuable feedback but also inspired others to explore the possibilities of AI in design. This open approach led to various adaptations of our project, including an internal version at Grab. Here’s our community engagement timeline:

Jun 2024: Community Contributions #1

Meetups: Friends of Figma Seoul & Educators event

At the Friends of Figma Seoul & Educators event, we presented our session “Experimenting with user test automation with AI and Open Source”. The event, initially planned for 40 attendees, received an overwhelming 400+ registrations, highlighting the design community’s intense interest in AI. During our presentation, the audience’s enthusiastic response and spontaneous applause were truly encouraging, motivating us to develop this project further into a Figma plugin.

Want to learn more about our journey with AI and design? Check out the full session:

Watch Full Session on Inflearn

Aug 2024: Community Contributions #2

Conference: InfCon 2024 Presentation

Shortly after releasing the Figma plugin, an unexpected opportunity came our way. Kim Ji-hong from Design Spectrum and Hong Yeon-ui from Inflearn, who had been following our project with interest, invited us to speak at InfCon 2024.

Most importantly, we extend our heartfelt thanks to the Korean designers who showed interest in our project and asked endless questions late into Friday evening. The support and energy we received from InfCon 2024 were truly uplifting.

InfCon24 recap: We never imagined the room would be packed for our 5 PM session on a Friday…

Thank you so much folks!

Jul 2024: Internal Adoption

From Side Project to Corporate Innovation

Encouraged by the community’s response, we took on our biggest challenge yet: convincing Grab’s design leadership to adopt our experimental project. From Tech Day presentations to leadership meetings, we delivered more than 6 different presentations and demos. By August, we had participated in over 20 stakeholder meetings, each one bringing us closer to our goal. After months of persistence and countless iterations, our dedication paid off.

This evolution from a side project to a corporate-backed initiative validates our vision of AI-powered usability testing and provides a platform for more ambitious experiments:

- Access to enterprise-level AI capabilities

- Ability to collect and analyze usage data at scale

- Opportunities to test with a larger designer base

While this corporate adoption was a significant milestone, we soon faced another challenge that would push us to think even bigger.

Sep 2024: Open source contributions

Challenges & Community Growth

As our project grew, we encountered a significant technical hurdle. The Klever plugin was limited to performing usability tests on only one screen, as Figma’s API constraints made it impossible to replace Selenium for controlling prototype flows.

My efforts to overcome Figma API limitations:

From meeting with Figma AI team to discussing with Dylan Field, Figma CEO

We went to great lengths to address these API limitations, even meeting with Figma’s AI team and eventually discussing our needs with Dylan Field, Figma’s CEO. Despite these high-level conversations and our persistent requests for new APIs, the technical constraints remained.

Rather than letting this limitation stop us, we saw an opportunity to expand our impact. We decided to open-source our project, believing that by sharing our challenges openly, we could not only find potential solutions but also inspire others to build upon our work. This decision to embrace open collaboration has already led to unexpected innovations and improvements.

A Figma plugin that allows you to do a simple usability test in Figma

github.com/FigmaAI/klever

AppAgent: Multimodal Agents as Smartphone Users

appagent-official.github.io

The community’s response has been incredibly encouraging. Developers and designers from various backgrounds have started experimenting with our codebase, proposing creative solutions to overcome the current limitations. One particularly promising approach involves integrating the Figma plugin with a Python Flask server:

Prototype of the Figma plugin integrated with a Python Flask server (Code)

Download Klever Desktop App

Klever Desktop is now available on the stores below. Download and start your AI-powered usability testing journey today!

Lessons learned

This project started as a small side project for designers to learn AI while having fun. There were many challenges along the way, but we received interest and encouragement from various designers and communities. Motivated by that support, we kept repeating our experiments and eventually turned them into a product.

In the era of AI, new AI tools are pouring out every day. Some may feel pressured to learn new things, while others may feel a vague fear that AI will replace our design jobs. But what this project has taught us is that AI is fascinating and fun. If we learn while playing and work while learning, just like children playing with interesting toys, and improve the small inconveniences in our product design ecosystem with AI, AI will no longer be a difficult or scary entity.

We hope the fun we had along the way comes through to everyone reading this article. So, enjoy a fun design experience with AI! 🤗